Sometimes you have to break a couple of eggs to make an omelet

Chaos engineering means simulating unexpected events on your systems and testing how they handle them. In short, you could compare it to code testing, but for systems. It can help you identify points of failure, harden your systems, train employees, and so on.

Chaos engineering is used by some of the major tech companies, and a lot of tools are in development at the moment. In this blog post I’ll take a look at ChaosBlade, an open source chaos toolkit developed and used by Alibaba. For this example we’ll run the chaos experiments through Puppet, so we can easily launch experiments on the systems in our environment.

The steady state

The steady state is the state of your machines when they are running as they should. It can cover a number of things, such as configuration files, services, and endpoints.

ChaosBlade does not generate such a steady state, and you can’t really define one in it either. You just start and stop experiments. This is where Puppet comes into play, as most of these things are components you already define in your Puppet code. In this blog I’ll write a small piece of code that gathers these definitions. We’ll run an experiment and see if anything is impacted by it. Then we’ll break off the experiment and evaluate our state again.
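To make the idea of a steady-state check a bit more concrete, here is a minimal shell sketch that tests one thing only: does an endpoint answer with a healthy status code? This is not the Puppet code this post builds on. The function names (`http_status`, `status_is_healthy`, `check_endpoint`) and the choice to treat any 2xx or 3xx response as healthy are assumptions made purely for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical steady-state probe for a single HTTP endpoint.
# Function names and the "2xx/3xx is healthy" rule are illustrative assumptions.

# Print the HTTP status code for a URL ("000" when the request fails).
http_status() {
  curl -s -o /dev/null --max-time 5 -w '%{http_code}' "$1" || true
}

# Succeed when the given status code counts as healthy.
status_is_healthy() {
  case "$1" in
    2[0-9][0-9]|3[0-9][0-9]) return 0 ;;
    *) return 1 ;;
  esac
}

# Report whether one endpoint is in its steady state.
check_endpoint() {
  local url="$1" code
  code="$(http_status "$url")"
  if status_is_healthy "$code"; then
    echo "steady: $url -> $code"
  else
    echo "deviation: $url -> $code"
    return 1
  fi
}
```

Running something like `check_endpoint http://localhost:8085` before, during and after an experiment would give a rough before/after picture for that one endpoint.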

Setup

We’ll run a few services inside a Docker container and unleash an experiment on it. The complete code can be found in this GitHub repo: https://github.com/negast/puppet-steady-state

Next we’ll launch the compose file and install some modules.

    docker-compose up -d --build
    docker exec -it puppetserver /bin/bash
    puppet module install puppetlabs-apt --version 8.3.0
    puppet module install puppet-nginx --version 3.3.0
    puppet module install puppet-archive --version 6.0.2
    puppet module install negast-chaosblade --version 0.1.4
    exit
    docker exec -it ubuntu1 /bin/bash
    apt-get update
    puppet agent -t

After running the Puppet agent, the Apache instance should be available at http://localhost:8085 in your browser.

Benchmarking

First we’ll benchmark the instance without CPU load. Luckily, Apache ships with a benchmarking tool, ab (ApacheBench), that we can use.

    docker exec -it ubuntu1 /bin/bash
    ab -n 90000 -c 100 http://localhost/

First benchmarks

Time taken for tests: 18.008 seconds

Connection Times (ms)

| | min | mean | [+/-sd] | median | max |
|---|---|---|---|---|---|
| Connect: | 0 | 6 | 3.2 | 5 | 37 |
| Processing: | 3 | 14 | 6.6 | 12 | 74 |
| Waiting: | 1 | 9 | 6.0 | 7 | 71 |
| Total: | 8 | 20 | 7.2 | 18 | 86 |

Time taken for tests: 18.798 seconds

Connection Times (ms)

| | min | mean | [+/-sd] | median | max |
|---|---|---|---|---|---|
| Connect: | 0 | 6 | 3.9 | 5 | 50 |
| Processing: | 3 | 15 | 8.2 | 12 | 114 |
| Waiting: | 1 | 9 | 6.6 | 7 | 74 |
| Total: | 4 | 21 | 9.6 | 18 | 114 |

Time taken for tests: 17.998 seconds

Connection Times (ms)

| | min | mean | [+/-sd] | median | max |
|---|---|---|---|---|---|
| Connect: | 0 | 5 | 3.2 | 5 | 39 |
| Processing: | 0 | 14 | 7.1 | 12 | 128 |
| Waiting: | 0 | 9 | 6.4 | 7 | 99 |
| Total: | 1 | 20 | 7.5 | 18 | 129 |

Time taken for tests: 19.682 seconds

Connection Times (ms)

| | min | mean | [+/-sd] | median | max |
|---|---|---|---|---|---|
| Connect: | 0 | 6 | 4.2 | 5 | 37 |
| Processing: | 2 | 16 | 8.7 | 13 | 100 |
| Waiting: | 0 | 10 | 6.9 | 7 | 86 |
| Total: | 4 | 22 | 10.1 | 18 | 105 |

Time taken for tests: 15.650 seconds

Connection Times (ms)

| | min | mean | [+/-sd] | median | max |
|---|---|---|---|---|---|
| Connect: | 0 | 5 | 2.7 | 4 | 45 |
| Processing: | 3 | 12 | 5.2 | 11 | 65 |
| Waiting: | 1 | 7 | 4.5 | 6 | 59 |
| Total: | 5 | 17 | 5.7 | 16 | 68 |
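Reading five separate totals by eye gets tedious, so a small helper can average them. The sketch below assumes the `Time taken for tests:` line has the layout shown in the runs above; the function name `mean_time` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: average the "Time taken for tests" values
# found in one or more ab reports read from standard input.
mean_time() {
  awk '
    # The seconds value is the fifth whitespace-separated field.
    /^Time taken for tests:/ { sum += $5; n++ }
    END {
      if (n == 0) { exit 1 }        # no matching lines found
      printf "%.3f\n", sum / n
    }
  '
}

# Example (inside the ubuntu1 container):
#   for i in 1 2 3 4 5; do ab -n 90000 -c 100 http://localhost/; done | mean_time
```

Fed with the five runs above, this works out to a mean of about 18.03 seconds.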


Uncomment the Puppet code in site.pp to enable the experiment on the ubuntu1 container.

    chaosexperiment_cpu { 'cpuload1':
      ensure  => 'present',
      load    => 99,
      climb   => 60,
      timeout => 600,
    }

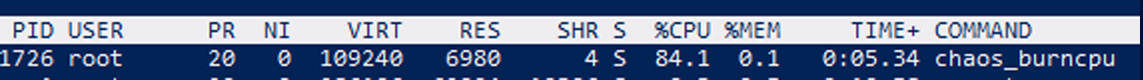

Now run the puppet agent again and you should see following process pop up:

You can also consult the blade CLI tool to view the experiments you created:

    root@ubuntu1:/# blade status --type create
    {
      "code": 200,
      "success": true,
      "result": [
        {
          "Uid": "cpuload1",
          "Command": "cpu",
          "SubCommand": "fullload",
          "Flag": " --cpu-percent=99 --timeout=600 --climb-time=60 --uid=cpuload1",
          "Status": "Success",
          "Error": "",
          "CreateTime": "2022-01-03T09:59:57.3833659Z",
          "UpdateTime": "2022-01-03T09:59:58.517777Z"
        }
      ]
    }
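Before benchmarking it can be handy to confirm from a script that the experiment really is active. The rough sketch below pulls the `Status` of one experiment out of that output. It assumes the output is laid out with one field per line, as shown above; if the real output is compact JSON, a proper JSON tool such as jq would be the more robust choice. The function name `experiment_status` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the Status of the experiment with the given Uid.
# Assumes one JSON field per line on standard input; this is a line-based
# sketch, not a real JSON parser.
experiment_status() {
  awk -v uid="$1" '
    # Remember the Uid of the experiment entry we are currently in.
    /"Uid":/    { gsub(/[",]/, "", $2); current = $2 }
    # Print the status only for the entry that matches the requested Uid.
    /"Status":/ { gsub(/[",]/, "", $2); if (current == uid) print $2 }
  '
}

# Example:
#   blade status --type create | experiment_status cpuload1
```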

Next, we’ll run a couple of benchmarks again.

Time taken for tests: 23.633 seconds

Connection Times (ms)

| | min | mean | [+/-sd] | median | max |
|---|---|---|---|---|---|
| Connect: | 0 | 8 | 5.5 | 6 | 135 |
| Processing: | 1 | 18 | 9.5 | 16 | 149 |
| Waiting: | 1 | 11 | 7.4 | 9 | 145 |
| Total: | 2 | 26 | 11.8 | 23 | 190 |

Time taken for tests: 38.229 seconds

Connection Times (ms)

| | min | mean | [+/-sd] | median | max |
|---|---|---|---|---|---|
| Connect: | 0 | 13 | 9.4 | 12 | 108 |
| Processing: | 2 | 29 | 16.1 | 25 | 171 |
| Waiting: | 0 | 17 | 11.8 | 15 | 139 |
| Total: | 2 | 42 | 20.4 | 38 | 191 |

Time taken for tests: 52.768 seconds

Connection Times (ms)

| | min | mean | [+/-sd] | median | max |
|---|---|---|---|---|---|
| Connect: | 0 | 19 | 13.5 | 16 | 177 |
| Processing: | 0 | 39 | 22.9 | 34 | 338 |
| Waiting: | 0 | 22 | 15.9 | 18 | 259 |
| Total: | 1 | 58 | 29.7 | 52 | 369 |

Time taken for tests: 40.652 seconds

Connection Times (ms)

| | min | mean | [+/-sd] | median | max |
|---|---|---|---|---|---|
| Connect: | 0 | 15 | 11.5 | 12 | 127 |
| Processing: | 3 | 30 | 18.7 | 24 | 299 |
| Waiting: | 0 | 16 | 12.7 | 13 | 267 |
| Total: | 4 | 45 | 25.2 | 38 | 302 |

Time taken for tests: 47.876 seconds

Connection Times (ms)

| | min | mean | [+/-sd] | median | max |
|---|---|---|---|---|---|
| Connect: | 0 | 18 | 12.3 | 16 | 111 |
| Processing: | 4 | 35 | 20.3 | 30 | 159 |
| Waiting: | 0 | 19 | 13.9 | 16 | 142 |
| Total: | 9 | 53 | 27.1 | 47 | 224 |

So we can see that the CPU load does have an impact: the same benchmark now takes roughly 24 to 53 seconds instead of 16 to 20. The service stays operational, though.
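To put a single number on that impact, the two sets of runs can be reduced to their means (about 18.027 seconds without load and 40.632 seconds with load, computed from the totals listed in this post) and compared. The helper below is a small sketch of that arithmetic; the name `slowdown_ratio` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: how many times slower is the loaded run
# compared to the baseline run? Both arguments are in seconds.
slowdown_ratio() {
  awk -v base="$1" -v loaded="$2" 'BEGIN {
    if (base <= 0) {
      print "baseline must be positive" > "/dev/stderr"
      exit 1
    }
    printf "%.2f\n", loaded / base
  }'
}

# Example with the mean times from this post:
#   slowdown_ratio 18.027 40.632   # prints 2.25
```

In other words, under the CPU experiment the benchmark took a little more than twice as long on average.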

Stop service experiment

Next, let’s try an experiment that stops the Apache process completely:

    blade create process stop --process-cmd apache2 --timeout 60 --uid stopp1

Or in Puppet code:

    chaosexperiment_process { 'stopp1':
      ensure      => 'present',
      type        => 'process_stop',
      process_cmd => 'apache2',
      timeout     => 60,
    }

This experiment effectively stops the Apache process for 60 seconds. Even additional Puppet runs during that window do not correct the service state. After the timeout, the process resumes as normal and we return to the steady state where the endpoint is available.
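One way to watch that return to the steady state is to poll the endpoint until it answers again. The sketch below is a generic retry loop; the name `wait_for` and its argument order are assumptions for illustration, and any command that exits with 0 on success can be plugged in as the probe.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a command repeatedly until it succeeds
# or the attempts run out.
# Usage: wait_for <max_attempts> <delay_seconds> <command> [args...]
wait_for() {
  local max_attempts="$1" delay="$2" attempt=1
  shift 2
  while [ "$attempt" -le "$max_attempts" ]; do
    if "$@"; then
      echo "recovered after $attempt attempt(s)"
      return 0
    fi
    sleep "$delay"
    attempt=$((attempt + 1))
  done
  echo "still failing after $max_attempts attempt(s)"
  return 1
}

# Example: probe once per second for up to 90 seconds.
#   wait_for 90 1 curl -sf --max-time 2 -o /dev/null http://localhost:8085/
```

The `--max-time 2` in the example matters here: a paused process may still accept the connection without ever responding, so the probe needs its own timeout to count that as a failure.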

Results

So what can we learn from these two experiments? Firstly, the CPU experiment shows that when load gets too high it impacts the speed of our requests, but does not necessarily bring down the whole system. The next step would be to think about how we can react to unexpected load to make the environment more durable, and then test the system again using benchmarking and chaos testing. We could for instance add a load balancer, launch additional containers, …

From the process interruption we can see that if the process freezes, our site is totally unavailable. Again, we’ll have to think about how we could enhance the environment to react to this. We could add monitoring that starts or restarts services, restarts systems, …

Afterthoughts

So we ran some minor experiments, one at a time. But you can also launch multiple experiments at once and really stress the system. For example, we could simulate someone tampering with files and see whether Puppet fixes them, restarts services, and so on. We also learned more about the steady state and how running these experiments can help us think about expanding it. We noticed that Puppet does not react well to stopped services, so one idea would be to add a custom resource that also tests endpoints and lets Puppet react to the result. This could let Puppet solve a lot of problems in your environment, leaving you with more time for testing and a more robust system. We could also identify additional services that are missing from our Puppet code, expanding our knowledge of the steady state.